More and more is being said and written about “Big Data”. But what is behind the term? Why are companies interested in collecting data and evaluating it systematically? And how is it done?

Anyone who wants to prepare for the technological future cannot avoid understanding “Big Data”. In this article we present a simplified overview of the fragmented world of big data. But first, what exactly is big data?

More information is created faster than organisations can make sense of it. (Jeff Jonas)

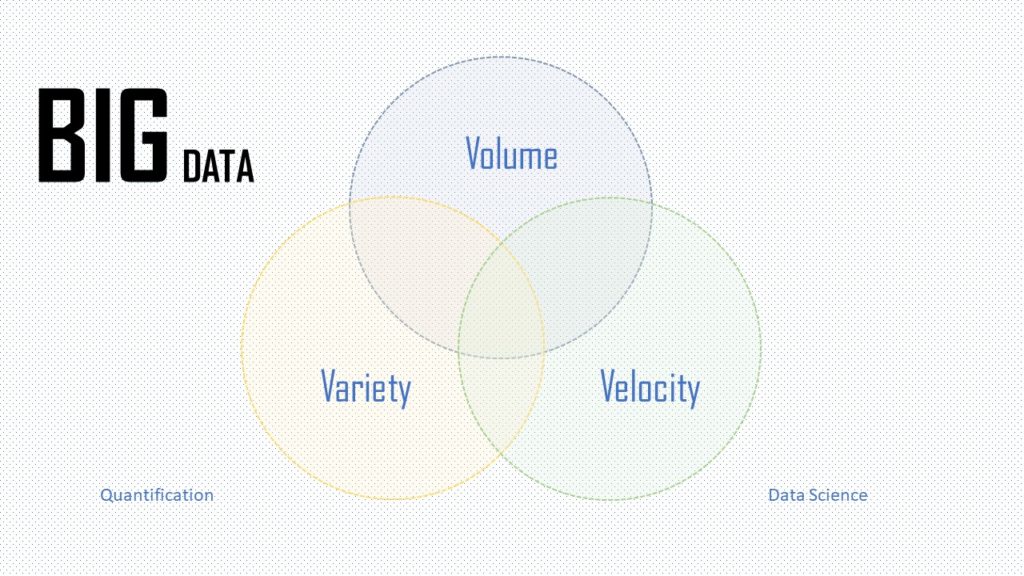

We like this definition: big data is when data arrives faster and in larger quantities than companies can use. The notion of uncontrollability it contains points in the right direction: whereas we used to form hypotheses and define, for example, target audiences before collecting the “corresponding” data, today all accumulated data is stored without prior inspection and only then analyzed.

Big data made simple

MapReduce

MapReduce is the Google paper that started it all. It describes a model for writing distributed code, inspired by elements of functional programming. It is one possible approach, developed by Google, and it has many technical advantages. Google's internal implementation is also called MapReduce, and the open source implementation is called Hadoop. Amazon's hosted Hadoop service is called Elastic MapReduce (EMR) and has plugins for many programming languages.
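To make the model concrete, here is a minimal single-process sketch of the classic word-count job in Python. It is not Hadoop code: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for illustration, and a real framework would run the map and reduce steps on many machines and handle the grouping itself.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    # Each document could be mapped on a different machine.
    mapped = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle(mapped)
    # Each key could be reduced on a different machine.
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data made simple", "big data is big"])
print(counts)
```

Because the map function looks at one record at a time and the reduce function at one key at a time, both steps can be spread over many machines without the programmer writing any coordination code.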

HDFS

The Hadoop Distributed File System (HDFS) is an implementation inspired by the Google File System (GFS) that stores large files across multiple machines. Hadoop processes the data held in HDFS.

Apache Spark

Apache Spark is a platform that is gaining in popularity. It offers more flexibility than MapReduce, but at the same time more structure than a raw “message passing interface”. It relies on the concept of distributed data structures known as RDDs (resilient distributed datasets).
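The idea behind a distributed data structure can be sketched in plain Python. The `MiniRDD` class below is a hypothetical toy, not Spark's API: it only illustrates that transformations such as `map` and `filter` are recorded rather than executed, and that work happens per partition once an action such as `collect` or `reduce` asks for a result.

```python
from functools import reduce as fold


class MiniRDD:
    """Toy stand-in for a partitioned dataset (hypothetical, not Spark's API)."""

    def __init__(self, partitions, lineage=()):
        self.partitions = partitions  # list of lists, one per "machine"
        self.lineage = lineage        # recorded transformations, not yet run

    def map(self, fn):
        # Transformation: only remember what to do.
        return MiniRDD(self.partitions, self.lineage + (("map", fn),))

    def filter(self, predicate):
        # Transformation: only remember what to do.
        return MiniRDD(self.partitions, self.lineage + (("filter", predicate),))

    def _compute(self, partition):
        # Replay the recorded steps on a single partition.
        items = iter(partition)
        for kind, fn in self.lineage:
            items = map(fn, items) if kind == "map" else filter(fn, items)
        return list(items)

    def collect(self):
        # Action: compute every partition and gather the results.
        return [x for part in self.partitions for x in self._compute(part)]

    def reduce(self, fn):
        # Action: combine all computed elements into one value.
        return fold(fn, self.collect())


numbers = MiniRDD([[1, 2, 3], [4, 5, 6]])
even_squares = numbers.filter(lambda x: x % 2 == 0).map(lambda x: x * x)
print(even_squares.collect())
print(even_squares.reduce(lambda a, b: a + b))
```

Since every dataset carries the list of steps that produced it, a lost partition can in principle be recomputed from its source, which is the "resilient" part of the name.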

MLlib and GraphX

The simplicity of Spark's programming model, which sits at a higher level than a “message passing interface”, makes it easier for data scientists to access data. The machine learning library that builds on it is called MLlib, and the graph processing framework is called GraphX.

Pregel and Giraph

Pregel and its open source counterpart Giraph are solutions for analyzing highly complex social graphs and strongly connected data structures on many computers at once. It is noteworthy that Hadoop / MapReduce is not well suited to graph analysis. HDFS / GFS, on the other hand, continues to be used for data storage.
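Pregel's approach is often summarized as "think like a vertex": computation proceeds in synchronized rounds (supersteps) in which each vertex reads its incoming messages, updates its value, and sends messages to its neighbours. The following single-process Python sketch propagates the largest value through a graph. The function name `pregel_max` and the dictionary-based graph format are assumptions made for this illustration, not Giraph's API.

```python
def pregel_max(graph, initial_values):
    """Spread the maximum value to every vertex, one superstep at a time.

    graph: vertex -> list of neighbouring vertices
    initial_values: vertex -> number
    """
    values = dict(initial_values)

    # Superstep 0: every vertex sends its value to its neighbours.
    inbox = {vertex: [] for vertex in graph}
    for vertex, neighbours in graph.items():
        for neighbour in neighbours:
            inbox[neighbour].append(values[vertex])

    # Later supersteps: run until no messages are in flight.
    while any(inbox.values()):
        outbox = {vertex: [] for vertex in graph}
        for vertex, messages in inbox.items():
            if messages and max(messages) > values[vertex]:
                # The vertex learned a larger value and tells its neighbours.
                values[vertex] = max(messages)
                for neighbour in graph[vertex]:
                    outbox[neighbour].append(values[vertex])
            # Otherwise the vertex stays silent ("votes to halt").
        inbox = outbox
    return values


chain = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
result = pregel_max(chain, {"a": 3, "b": 6, "c": 2, "d": 1})
print(result)
```

Each vertex only ever touches its own value and its own messages, so the vertices can be distributed over many machines and only the messages have to cross the network.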

ZooKeeper

Anyone writing cluster software today will most likely use ZooKeeper for coordination. ZooKeeper allows mapping of typical concurrency patterns (lock, counter, semaphore) across hundreds of servers. Hadoop uses ZooKeeper to implement fail-over of its most important system components.

Flume

Flume is an Apache project that aims to transport data from various origins (sources) into HDFS or files (sinks). In short: Flume is a log collector which, in its newer versions, can already correlate data in the transport channel. It does not matter what type of data is being transported, or what the source and destination are, as long as they are supported. Consistent use of the API allows developers to write and integrate their own sources and sinks.

Scribe

Scribe is an open source project from Facebook. Similar to Flume, Scribe aims to make it easy to collect and analyze large volumes of log data.

Google BigTable and HBase

Google BigTable was developed back in 2004, and HBase is its open-source counterpart. BigTable is used in many popular applications, such as MapReduce, Google Maps, Google Books, YouTube or Google Earth. During development, great importance was attached to scalability and speed. This was made possible by the non-relational structure.
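The non-relational structure can be pictured as a sparse, sorted map from a row key, a column name and a timestamp to a value. The Python sketch below models that idea in memory. The class `MiniTable` and its methods are hypothetical names for this illustration and do not correspond to the BigTable or HBase client APIs.

```python
from collections import defaultdict


class MiniTable:
    """In-memory sketch of a (row, column, timestamp) -> value store."""

    def __init__(self):
        # row key -> "family:qualifier" -> list of (timestamp, value)
        self._rows = defaultdict(lambda: defaultdict(list))

    def put(self, row, column, value, timestamp):
        # Old versions are kept; nothing is overwritten in place.
        self._rows[row][column].append((timestamp, value))

    def get(self, row, column):
        """Return the most recent value of a cell, or None if it is empty."""
        versions = self._rows.get(row, {}).get(column, [])
        if not versions:
            return None
        return max(versions, key=lambda version: version[0])[1]

    def scan(self, start_row, end_row):
        """Yield row keys in sorted order within [start_row, end_row)."""
        for key in sorted(self._rows):
            if start_row <= key < end_row:
                yield key


table = MiniTable()
table.put("com.example/index", "contents:html", "<html>v1", timestamp=1)
table.put("com.example/index", "contents:html", "<html>v2", timestamp=2)
table.put("com.example/about", "anchor:home", "About us", timestamp=1)

print(table.get("com.example/index", "contents:html"))
print(list(table.scan("com.example", "com.example~")))
```

Rows need no fixed set of columns, and because row keys are kept in sorted order, a range of neighbouring keys can be read together and split across servers.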

Hive and Pig

Hive and Pig are high-level abstraction languages built on Hadoop: Hive offers a SQL-like query language (HiveQL), while Pig uses a data-flow language (Pig Latin). They are used to analyze tabular data in a distributed file system (think of a very, very large Excel spreadsheet, so big that it does not fit on a single machine). Both follow a data warehouse principle: data is simply stored as-is and then never changed. The big advantage is that the data can be in different formats (plain text, compressed, binary). Hive and Pig are loosely comparable to a database: they require meta-information about a table and its columns, which map to individual fields of the data to be evaluated. These fields must be assigned specific data types. Hive and Pig are interesting for business analysts, statisticians and SQL programmers.
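The principle of storing raw data and attaching column names and types only when it is read can be sketched in a few lines of Python. The sample data, the `SCHEMA` list and the function names are invented for this illustration; in Hive the same aggregation would be written as a query along the lines of `SELECT city, SUM(revenue) ... GROUP BY city`.

```python
import csv
import io

# Raw, unchanged data as it might sit in a file (tab-separated text).
RAW_LOG = (
    "2018-01-03\tberlin\t120.50\n"
    "2018-01-03\tzurich\t80.00\n"
    "2018-01-04\tberlin\t42.25\n"
)

# Meta-information: column names and the data type of each field.
SCHEMA = [("day", str), ("city", str), ("revenue", float)]


def read_with_schema(raw_text, schema):
    """Apply the schema while reading; the stored data is never modified."""
    reader = csv.reader(io.StringIO(raw_text), delimiter="\t")
    for fields in reader:
        yield {name: cast(value) for (name, cast), value in zip(schema, fields)}


def revenue_by_city(rows):
    """Group rows by city and sum the revenue column."""
    totals = {}
    for row in rows:
        totals[row["city"]] = totals.get(row["city"], 0.0) + row["revenue"]
    return totals


totals = revenue_by_city(read_with_schema(RAW_LOG, SCHEMA))
print(totals)
```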

Over time it turned out that Hive and Pig were slow because they ran on top of Hadoop's MapReduce. To solve this problem, developers went straight to HDFS, and the results include Google's Dremel (“Dremel: Interactive Analysis of Web-Scale Datasets”), Google's F1 (a distributed RDBMS that supports Google's ad operations), Facebook's Presto (“Presto | Distributed SQL Query Engine for Big Data”), Apache Spark SQL, Cloudera Impala (“Cloudera Impala: Real-time queries in Apache Hadoop”), Amazon Redshift, and so on. They all differ slightly in semantics, but in the end each one makes it easier to analyze tabular data spread across many data warehouses.

Mahout

Mahout (scalable machine learning and data mining: https://mahout.apache.org) plays a major role in the mass data processing ensemble. Mahout offers almost unlimited application possibilities, from product recommendations based on areas of interest and statistical algorithms to fraud detection, probability analyses or trend analyses in social networks. Mahout uses a centralized data clustering algorithm that goes well beyond the scope of HDFS.

Oozie

In most processes, many tasks run at the same time and keep repeating. In a highly complex system like Apache Hadoop, tackling each task individually would cost a lot of time and money. The solution is Oozie, a workflow manager. Oozie manages Apache Hadoop workflows and, to a limited extent, controls error handling such as restarting, reloading and stopping a process. Oozie can perform scheduled actions as well as data-driven actions, for example when data arrives irregularly and cannot be handled by time-controlled batch processing.

Lucene

Lucene is open source and Java based. On the one hand, it creates an index of files that takes up about a quarter of the volume of the indexed files. On the other hand, Lucene then returns search results as ranked lists, for which several search algorithms are available.
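The two halves described above, building an index and returning ranked results, can be illustrated with a tiny inverted index in Python. This is a simplified sketch with invented function names and a plain term-frequency score. Lucene's own data structures and ranking formulas are considerably more elaborate.

```python
from collections import Counter, defaultdict


def build_index(documents):
    """Map each term to a counter of {document id: number of occurrences}."""
    index = defaultdict(Counter)
    for doc_id, text in documents.items():
        for term in text.lower().split():
            index[term][doc_id] += 1
    return index


def search(index, query):
    """Rank documents by the summed frequency of the query terms."""
    scores = Counter()
    for term in query.lower().split():
        for doc_id, frequency in index.get(term, {}).items():
            scores[doc_id] += frequency
    # Highest score first; documents without any query term are left out.
    return [doc_id for doc_id, _ in scores.most_common()]


documents = {
    "a": "hadoop stores data in hdfs",
    "b": "hadoop jobs read hadoop data from hdfs",
    "c": "lucene builds an index",
}
index = build_index(documents)
print(search(index, "hadoop data"))
```

A query only touches the index entries for its own terms, which is why searching stays fast even when the indexed files themselves are large.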

Sqoop

Sqoop is a connector between Hadoop and relational database management systems (RDBMS). It is used and supported by numerous database providers. Using Sqoop, Apache Hadoop can be easily integrated into existing BI solutions as a middleware application. The elegant thing about Sqoop is that select statements can be applied already at the database query stage or during restore. In addition to the connectors to the well-known RDBMS, there are also connectors to products such as Teradata, Netezza, MicroStrategy, Quest and Tableau.

Hue

Hue is a web-based graphical user interface that displays parts and results of the applications mentioned above.

